Untangling AWS Networks with Cloud WAN

Table of Contents

- Introduction

- Overview of Architecture

- Implementation

- Centralized Management and Visibility

- Challenges and Lessons Learned

- Key Takeaways

Originally written for GuidePoint Security and shared here with permission.

Introduction

As organizations grow, so does their infrastructure, often without a well-designed foundation to support that growth. At GuidePoint Security, we regularly work with customers who are looking to establish that foundation, or who are trying to untangle the mess of a growing environment that was not well structured to begin with.

Three key areas where we see this lack of structure start to affect operations are multi-account management, Identity and Access Management, and networking. As with most problems in AWS, there are a variety of ways to address these issues, and each solution may be a slightly better fit for different use cases.

Specifically, when it comes to networking, the first and most straightforward way to connect applications in separate VPCs or accounts is, of course, VPC peering. The limitation is that the number of peering connections required scales quadratically with the number of VPCs, so beyond a handful of VPCs this quickly becomes unmanageable. Enter Transit Gateway. Transit Gateway's hub-and-spoke design lets many VPCs connect to a central point, which simplifies creating that connectivity. The introduction of Transit Gateway (TGW) is undoubtedly one of the most significant upgrades in AWS networking. However, with further growth even TGWs can become difficult to manage and centrally visualize, especially with more complex networking requirements such as centralized ingress/egress, inspection architecture, and separation between different enclaves (e.g., prod and non-prod).
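To make the scaling difference concrete, here is a minimal Python sketch that compares the number of peering connections a full mesh needs with the number of attachments a hub-and-spoke design needs. Because VPC peering is not transitive, every pair of VPCs needs its own connection. This is plain arithmetic, not a call to any AWS API, and the function names are our own.

```python
def full_mesh_peering_connections(vpc_count: int) -> int:
    """Peering connections needed for every VPC to reach every other VPC directly."""
    # VPC peering is not transitive, so each unordered pair of VPCs needs its own
    # connection: n * (n - 1) / 2, which grows quadratically with n.
    return vpc_count * (vpc_count - 1) // 2


def hub_and_spoke_attachments(vpc_count: int) -> int:
    """Attachments needed when each VPC connects once to a central hub such as a Transit Gateway."""
    return vpc_count


if __name__ == "__main__":
    for n in (5, 10, 50, 100):
        print(
            f"{n:>3} VPCs: {full_mesh_peering_connections(n):>4} peering connections "
            f"vs {hub_and_spoke_attachments(n):>3} hub attachments"
        )
```

At 100 VPCs, a full mesh needs 4,950 peering connections, while a hub-and-spoke design needs 100 attachments.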

Under the hood, AWS Cloud WAN uses managed Transit Gateways to connect your applications to the resources they need, but abstracting that layer delivers some key benefits. It allows organizations to build a unified global network by automating the deployment of network components and policies. Cloud WAN enables multi-region and multi-account connectivity within AWS and eases the process of connecting non-AWS environments. It also provides centralized control and visibility to simplify management. Cloud WAN integrates well with both AWS-native and third-party solutions to funnel north-south and east-west traffic through inspection points. Finally, in some cases Cloud WAN can also reduce costs by enabling shared use of key services such as VPC endpoints and NAT gateways.

Overview of Architecture

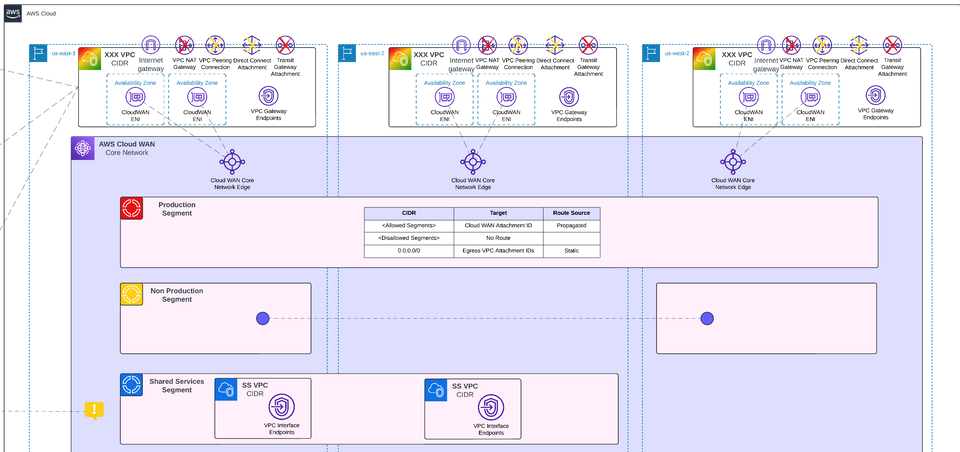

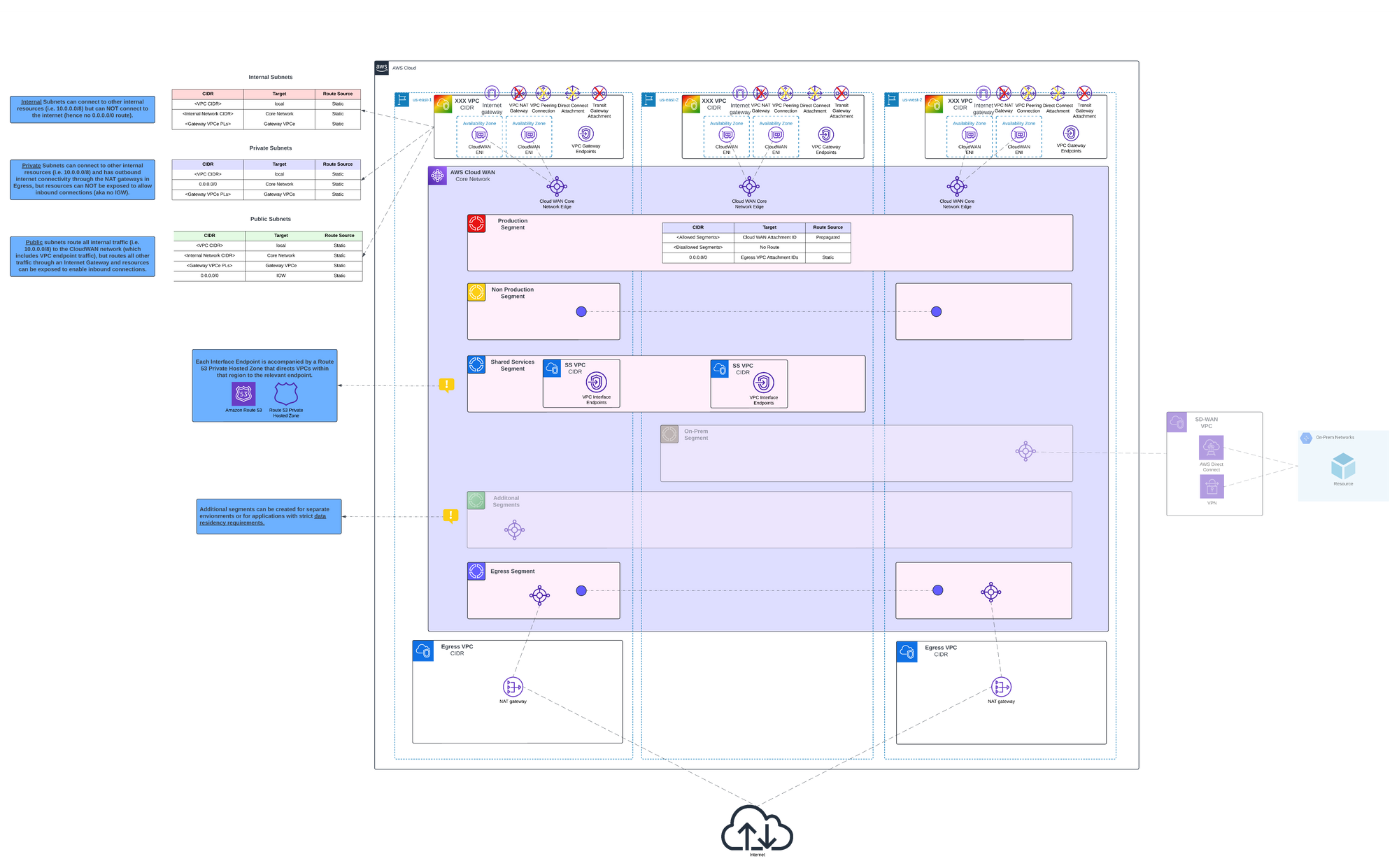

There are several key design decisions to make when architecting an AWS Cloud WAN solution, starting with region support and segmentation strategy. A core network edge (CNE) is deployed into each region you choose when setting up the Cloud WAN network, and each CNE has a cost ($0.50 USD per hour, or roughly $365 per month). The regional footprint should therefore be wide enough to meet the organization's needs, but no wider. The next, more complex step is to design the segmentation strategy. Segments are the logical structures used to delineate the enclaves connected to the network and to define policies that allow or disallow connectivity between them.
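Because every selected region adds a CNE, it can help to estimate the fixed cost of a regional footprint before committing to it. The sketch below assumes the $0.50/hour rate quoted above and the common 730-hours-per-month billing approximation; pricing can change, so treat the rate as a parameter to check against current AWS pricing. It models only the CNE hourly charge, not attachment or data-processing charges, which Cloud WAN bills separately.

```python
HOURS_PER_MONTH = 730  # common billing approximation: 8,760 hours per year / 12


def monthly_cne_cost(region_count: int, hourly_rate_usd: float = 0.50) -> float:
    """Approximate monthly cost of core network edges (one CNE per selected region).

    Only the CNE hourly charge is modeled; attachment and data-processing
    charges are not included.
    """
    if region_count < 0:
        raise ValueError("region_count must be non-negative")
    return region_count * hourly_rate_usd * HOURS_PER_MONTH


if __name__ == "__main__":
    for regions in (1, 2, 4):
        print(f"{regions} region(s): ~${monthly_cne_cost(regions):,.2f}/month")
```

One region works out to about $365 per month, and a four-region footprint to about $1,460 per month, before any other charges.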

Two common strategies for designing segments are environment-based segmentation and application-based segmentation. Environment-based segmentation separates segments by environment or stage of deployment, for example production, development, testing, and shared services/infrastructure. This makes it easy to create environment-specific routing and access controls, for instance to prevent communication between production and non-production environments. The downside is that, by default, this strategy allows mesh connectivity between all systems within the same segment, so ideally it should be layered with an additional firewall appliance for more granular control over which systems and applications can connect to each other, and over which ports/protocols. Fortunately, Cloud WAN is well designed for this type of integration through Network Function Groups (NFGs).

Application-based segmentation instead separates traffic by application or workload. This allows critical applications to be isolated from less critical or public-facing ones, which can limit lateral movement in the event of a breach. It also provides somewhat more fine-grained control over application-specific security policies without the need for an additional firewall appliance. However, it cannot scale indefinitely, because there is a non-adjustable limit of 40 segments per core network, and it can also complicate the policy design.

Generally speaking, we recommend environment-based segmentation, which is exactly what we used for a proof of concept built to test the capabilities and limitations of AWS Cloud WAN. That said, many factors go into this decision, and it is always something the experts at GuidePoint Security can help you with. As shown in the architecture diagram, the Cloud WAN Core Network is divided into four initial segments: Production, Non-Production, Shared Services, and Egress. Common use cases for additional segments include a dedicated segment for connectivity to an on-premises network, and segments for environments and applications with strict regulatory requirements, where stricter network controls can be applied without affecting all other applications. In this case, Egress is set up for outbound connectivity only, through a NAT gateway; this segment would be replaced or restructured if the architecture included inspection appliances and/or centralization of both ingress and egress.

Once you have determined your segmentation strategy, the next step is to decide which segments are allowed to talk to which other segments. A great way to organize this is a matrix that lists every segment as both a column header and a row header, so you can mark which pathways are allowed, which are blocked, and, in some cases, which are allowed through Cloud WAN but sent to an inspection device for more fine-grained control over the traffic. This matrix is key when writing the policy definition, because it saves you from stopping to make decisions ad hoc. Below is the matrix designed for the proof of concept. In addition to allowing or disallowing connections between segments, the matrix can also identify segments that should be isolated, meaning systems within the segment cannot communicate with each other (beyond their own VPC), only with systems in other allowed segments.
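The traffic enforcement matrix described above can also be kept as data, which makes it easy to review and to reuse when writing the policy. The following is a minimal sketch that encodes the proof-of-concept decisions (Production and Non-Production can each reach Shared Services and Egress but not each other; Shared Services and Egress cannot reach each other and are isolated) and renders them as a grid. The lowercase alphanumeric segment names are our own choice, picked to be safe for use in a core network policy.

```python
SEGMENTS = ["production", "nonproduction", "sharedservices", "egress"]

# Unordered segment pairs that are allowed to exchange traffic in the proof of concept.
ALLOWED_PAIRS = {
    frozenset({"production", "sharedservices"}),
    frozenset({"production", "egress"}),
    frozenset({"nonproduction", "sharedservices"}),
    frozenset({"nonproduction", "egress"}),
}

# Segments whose attachments may not talk to each other (isolated segments).
ISOLATED = {"sharedservices", "egress"}


def decision(src: str, dst: str) -> str:
    """Return 'allow' or 'block' for traffic from segment src to segment dst."""
    if src == dst:
        return "block" if src in ISOLATED else "allow"
    return "allow" if frozenset({src, dst}) in ALLOWED_PAIRS else "block"


def render_matrix() -> str:
    """Render the matrix as text: rows are sources, columns are destinations."""
    width = max(len(s) for s in SEGMENTS) + 2
    lines = ["".ljust(width) + "".join(s.ljust(width) for s in SEGMENTS)]
    for src in SEGMENTS:
        cells = "".join(decision(src, dst).ljust(width) for dst in SEGMENTS)
        lines.append(src.ljust(width) + cells)
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_matrix())
```

A third cell value, such as `inspect`, could be added for pathways that are allowed but routed through an inspection appliance.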

Implementation

For this proof of concept, we used Terraform to deploy the resources consistently and to make setup and teardown easy during testing. The Terraform code is split into two workspaces: one for deploying the Cloud WAN Core Network, and a second for deploying and connecting a variety of VPCs in multiple regions and segments. This two-stage deployment works well for multi-account environments because the Core Network is still deployed and managed in a single account, while the VPC deployment can be templated and adapted per account depending on the type of environment (e.g., prod or non-prod). As long as the Core Network is shared with the account, or with the organization, via AWS Resource Access Manager (RAM), connecting a VPC to the Core Network is relatively easy and works the same whether it is deployed via Terraform or another IaC tool. Assigning each VPC attachment to a specific segment can also be automated by defining a tagging policy in the core network policy and then tagging each VPC attachment to match the segment that VPC is intended to join.
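As an illustration of that tag-based assignment, the sketch below generates one attachment-policy rule per segment: if an attachment's tag value equals a segment name, the attachment is associated with that segment. The field names (`rule-number`, `conditions`, `action`, `association-method`) follow the Cloud WAN core network policy JSON format as we understand it; the tag key `segment` and the rule numbering are hypothetical. Verify the structure against the core network policy reference before use.

```python
import json

# Hypothetical tag key: attachments would be tagged, e.g., segment=production.
SEGMENT_TAG_KEY = "segment"
SEGMENTS = ["production", "nonproduction", "sharedservices", "egress"]


def attachment_policies(segments, tag_key=SEGMENT_TAG_KEY, first_rule=100, step=100):
    """Build one rule per segment that maps a matching tag value to that segment."""
    rules = []
    for index, segment in enumerate(segments):
        rules.append({
            "rule-number": first_rule + index * step,
            "condition-logic": "or",
            "conditions": [
                {"type": "tag-value", "operator": "equals", "key": tag_key, "value": segment}
            ],
            # "constant" associates every matching attachment with one named segment.
            "action": {"association-method": "constant", "segment": segment},
        })
    return rules


if __name__ == "__main__":
    print(json.dumps({"attachment-policies": attachment_policies(SEGMENTS)}, indent=2))
```

Writing one explicit rule per segment means an attachment tagged with an unknown value matches no rule and is not associated with any segment. As we understand it, the policy format also offers a `tag` association method that takes the segment name directly from a tag's value, which is shorter but less restrictive.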

The most complex part of deploying the Core Network is writing the policy, which is made much simpler by pre-defining connectivity requirements in the traffic enforcement matrix. In this case, the policy was written so that both Production and Non-Production can communicate with Shared Services and Egress, but not with each other. Additionally, Shared Services cannot connect to Egress, and both Shared Services and Egress are isolated to prevent lateral movement within these shared segments. After the initial deployment of VPCs (specifically the Egress VPCs), the policy is updated to create routes to the Egress VPC attachment so that private subnets within Production and Non-Production know where to send internet-bound traffic.
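The following sketch shows how the rules in the previous paragraph might be expressed as a core network policy document, generated from Python so that both deployment phases use the same code. The overall shape (`version`, `core-network-configuration`, `segments`, `segment-actions` with `share` and `create-route`) reflects the Cloud WAN policy format as we understand it, but it is not the proof of concept's actual policy: the regions, ASN range, and attachment ID are hypothetical placeholders. Calling it with no egress attachment IDs corresponds to the first deployment; passing the Egress attachment IDs corresponds to the later policy update.

```python
import json

# Hypothetical region choice; one core network edge is deployed per location.
EDGE_LOCATIONS = ["us-east-1", "us-west-2"]


def build_core_network_policy(egress_attachment_ids=()):
    """Build a policy with four segments, route sharing, and optional default routes."""
    segments = [
        {"name": name, "isolate-attachments": isolated, "require-attachment-acceptance": False}
        for name, isolated in [
            ("production", False),
            ("nonproduction", False),
            ("sharedservices", True),  # isolated: no lateral movement inside the segment
            ("egress", True),
        ]
    ]
    # Share routes between each shared segment and the two workload segments.
    # Shared Services and Egress are never shared with each other, and Production
    # is never shared with Non-Production.
    segment_actions = [
        {
            "action": "share",
            "mode": "attachment-route",
            "segment": shared,
            "share-with": ["production", "nonproduction"],
        }
        for shared in ("sharedservices", "egress")
    ]
    # Phase two: static default routes that send internet-bound traffic to Egress.
    if egress_attachment_ids:
        for workload in ("production", "nonproduction"):
            segment_actions.append({
                "action": "create-route",
                "segment": workload,
                "destination-cidr-blocks": ["0.0.0.0/0"],
                "destinations": list(egress_attachment_ids),
            })
    return {
        "version": "2021.12",
        "core-network-configuration": {
            "asn-ranges": ["64512-65534"],  # hypothetical private ASN range
            "edge-locations": [{"location": region} for region in EDGE_LOCATIONS],
        },
        "segments": segments,
        "segment-actions": segment_actions,
    }


if __name__ == "__main__":
    # Hypothetical attachment ID, used only to show the phase-two output.
    print(json.dumps(build_core_network_policy(["attachment-0123456789abcdef0"]), indent=2))
```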

The Shared Services segment provides access to a variety of VPC endpoints for the Production and Non-Production segments, and could also host custom shared services to meet organizational needs. A few things must be configured carefully for this setup to work. First, the VPCs must have DNS support and DNS hostnames enabled. This is because when a VPC endpoint is deployed within a VPC, a managed Route 53 Private Hosted Zone is created so that DNS resolution within the VPC sends traffic bound for that service to the dedicated VPC endpoint instead of the public internet endpoint. When the VPC endpoint lives in a centralized VPC, achieving the same routing requires creating a Route 53 Private Hosted Zone and sharing it with each consuming VPC. In addition, the VPC endpoints must be present in the same Availability Zones (AZs) as the VPCs in other segments, so we recommend creating Shared Services VPCs with at least one subnet in every AZ in the region, so that the endpoints can support all AZs within the region*. The Shared Services segment should also have at least one endpoint per service per region, because even if the added latency of cross-region endpoint access is acceptable, in many cases it is not possible due to region-specific encryption keys.
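To plan the Private Hosted Zones for centralized endpoints, it helps to work out which zone each consuming VPC needs. This is a minimal planning sketch, not a deployment script: `SpokeVpc` is a hypothetical inventory record, and `endpoint_hostname` assumes the common `<service>.<region>.amazonaws.com` hostname pattern (true for services such as ssm, sts, kms, and logs; other services, such as ECR, use different hostnames, so check each service's documentation). In a real deployment, each zone would also hold a record pointing at the centralized endpoint's DNS name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpokeVpc:
    """A consuming VPC in Production or Non-Production (hypothetical inventory record)."""
    vpc_id: str
    region: str
    enable_dns_support: bool
    enable_dns_hostnames: bool


def endpoint_hostname(service: str, region: str) -> str:
    # Assumption: the service uses the "<service>.<region>.amazonaws.com" pattern.
    return f"{service}.{region}.amazonaws.com"


def plan_private_hosted_zones(services, spokes):
    """Map each Private Hosted Zone name to the spoke VPC IDs that should be associated with it.

    Zones are planned per service and per region, because consumers should use
    the endpoint in their own region.
    """
    misconfigured = [
        v.vpc_id for v in spokes if not (v.enable_dns_support and v.enable_dns_hostnames)
    ]
    if misconfigured:
        raise ValueError(f"enable DNS support and DNS hostnames on: {misconfigured}")
    plan = {}
    for vpc in spokes:
        for service in services:
            plan.setdefault(endpoint_hostname(service, vpc.region), []).append(vpc.vpc_id)
    return plan


if __name__ == "__main__":
    # Hypothetical VPC IDs.
    spokes = [
        SpokeVpc("vpc-0aaa", "us-east-1", True, True),
        SpokeVpc("vpc-0bbb", "us-west-2", True, True),
    ]
    for zone, vpc_ids in sorted(plan_private_hosted_zones(["ssm", "sts"], spokes).items()):
        print(zone, vpc_ids)
```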

For the proof of concept, the Egress segment centralizes NAT Gateways for private subnets across the entire network. Unlike VPC endpoints, a set of NAT Gateways per region is not required, although it is generally recommended: nothing technically prevents the use of cross-region NAT Gateways, but doing so adds measurable latency. A common case where cross-region egress may be worth it is when an organization deploys inspection appliances that are too expensive to run in every region. There, the cost reduction can justify the added latency of sending traffic cross-region through those appliances.

The Production and Non-Production VPCs are fairly uncomplicated. They are deployed across a handful of regions and AZs, and once a VPC attachment is established, any VPC in any account is centrally visible from the account that owns the Cloud WAN Core Network.

* AZ names (e.g., us-east-1a) are mapped randomly to physical zones for each account, so you must use the AZ ID (e.g., use1-az1) to ensure that AZ coverage maps correctly between VPCs, especially in segments such as Shared Services.
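The footnote's point can be checked mechanically: translate each account's AZ names into AZ IDs, then compare IDs rather than names. In practice, an account's name-to-ID mapping can be read from the `ZoneName` and `ZoneId` fields returned by the EC2 `DescribeAvailabilityZones` API; the mappings in this sketch are invented for illustration, and the function names are our own.

```python
def to_az_ids(az_names, name_to_id):
    """Translate one account's AZ names (e.g. us-east-1a) into AZ IDs (e.g. use1-az1)."""
    return {name_to_id[name] for name in az_names}


def uncovered_az_ids(shared_services_az_ids, spoke_az_ids):
    """AZ IDs used by spoke VPCs that have no Shared Services subnet (and so no endpoint ENI)."""
    return sorted(set(spoke_az_ids) - set(shared_services_az_ids))


if __name__ == "__main__":
    # Hypothetical name-to-ID mappings for two accounts in us-east-1.
    shared_account = {"us-east-1a": "use1-az1", "us-east-1b": "use1-az2", "us-east-1c": "use1-az4"}
    prod_account = {"us-east-1a": "use1-az4", "us-east-1b": "use1-az6", "us-east-1c": "use1-az1"}

    shared_ids = to_az_ids(["us-east-1a", "us-east-1b", "us-east-1c"], shared_account)
    prod_ids = to_az_ids(["us-east-1a", "us-east-1b"], prod_account)
    # Same AZ names, different physical zones: prints ['use1-az6'].
    print(uncovered_az_ids(shared_ids, prod_ids))
```

Comparing by name alone would suggest full coverage here, since both accounts use us-east-1a and us-east-1b; comparing by ID shows that one of the Production subnets sits in a zone with no Shared Services subnet.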

Centralized Management and Visibility

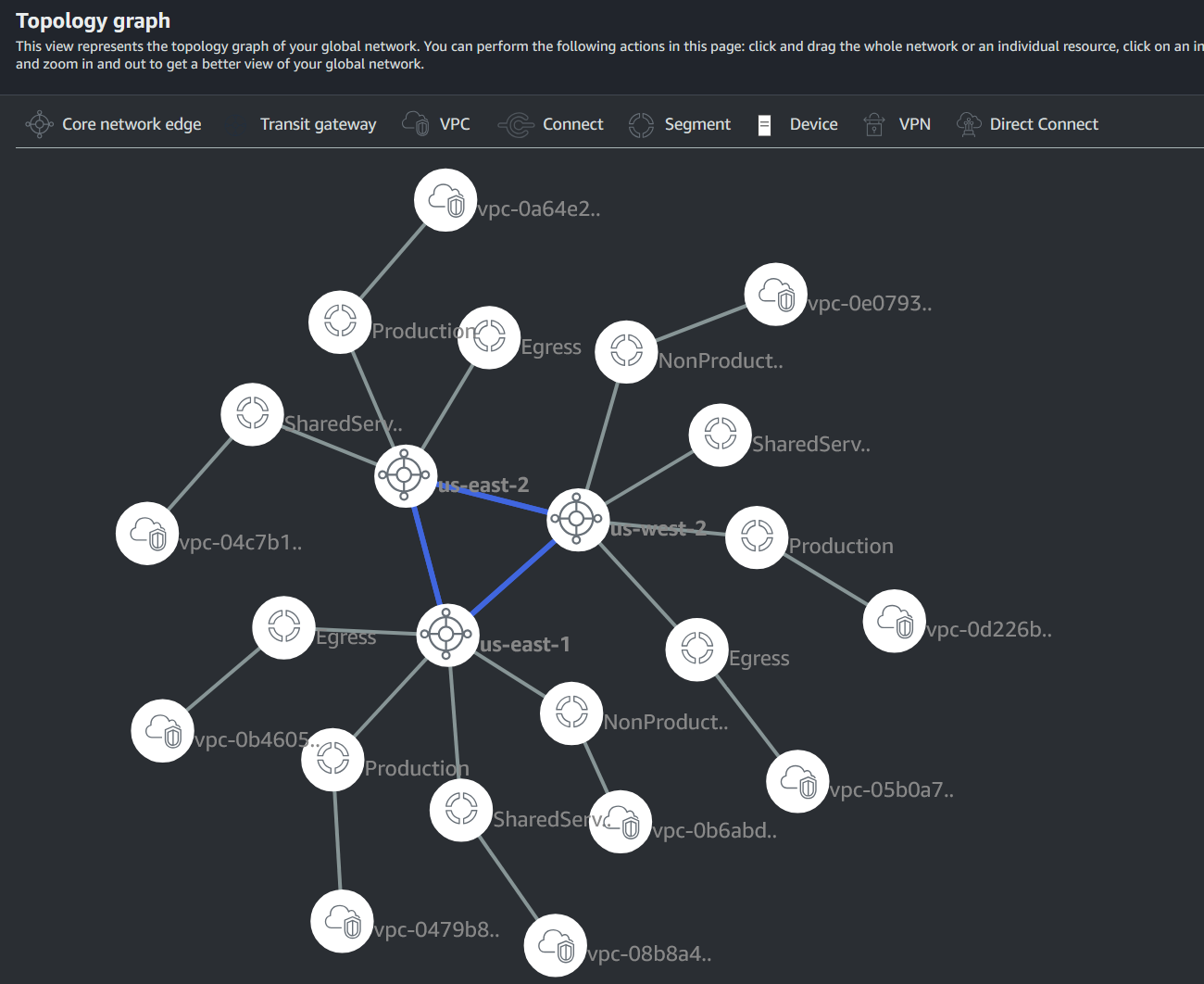

The Cloud WAN management interface in the AWS Console is nested within AWS Network Manager and provides two main views for visualizing the entire network. The first is the Topology graph, which shows a bubble for each region, containing a bubble for each segment available in that region (region presence is configured per segment), and finally a bubble for each VPC connected to each segment in each region. The Topology graph for the proof-of-concept deployment is shown above.

The Topology tree view shows essentially the same information, but in a more structured layout than the Topology graph, and its elements cannot be moved around.

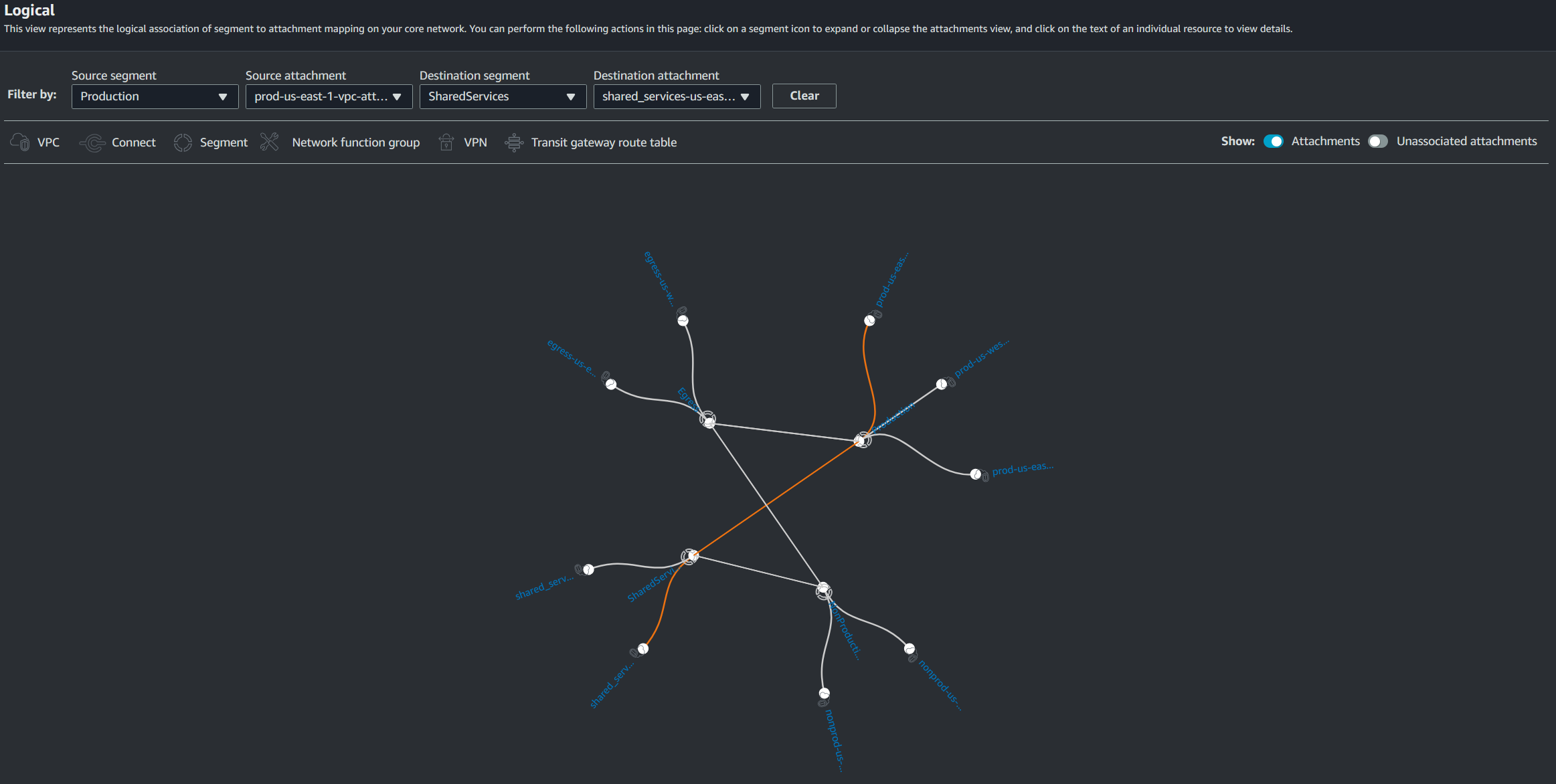

Another fantastic tool the service provides for visualizing routes is the Logical view. It lets you select source and destination segments and attachments to check whether VPCs have connectivity through the network and, if they do, the exact path the traffic takes. For example, here is the path a VPC in the Production segment takes to reach a Shared Services VPC. In this image, the orange line depicts an uninterrupted path between the prod-us-east-1-vpc-attachment and the shared_services-us-east-1-vpc-attachment.

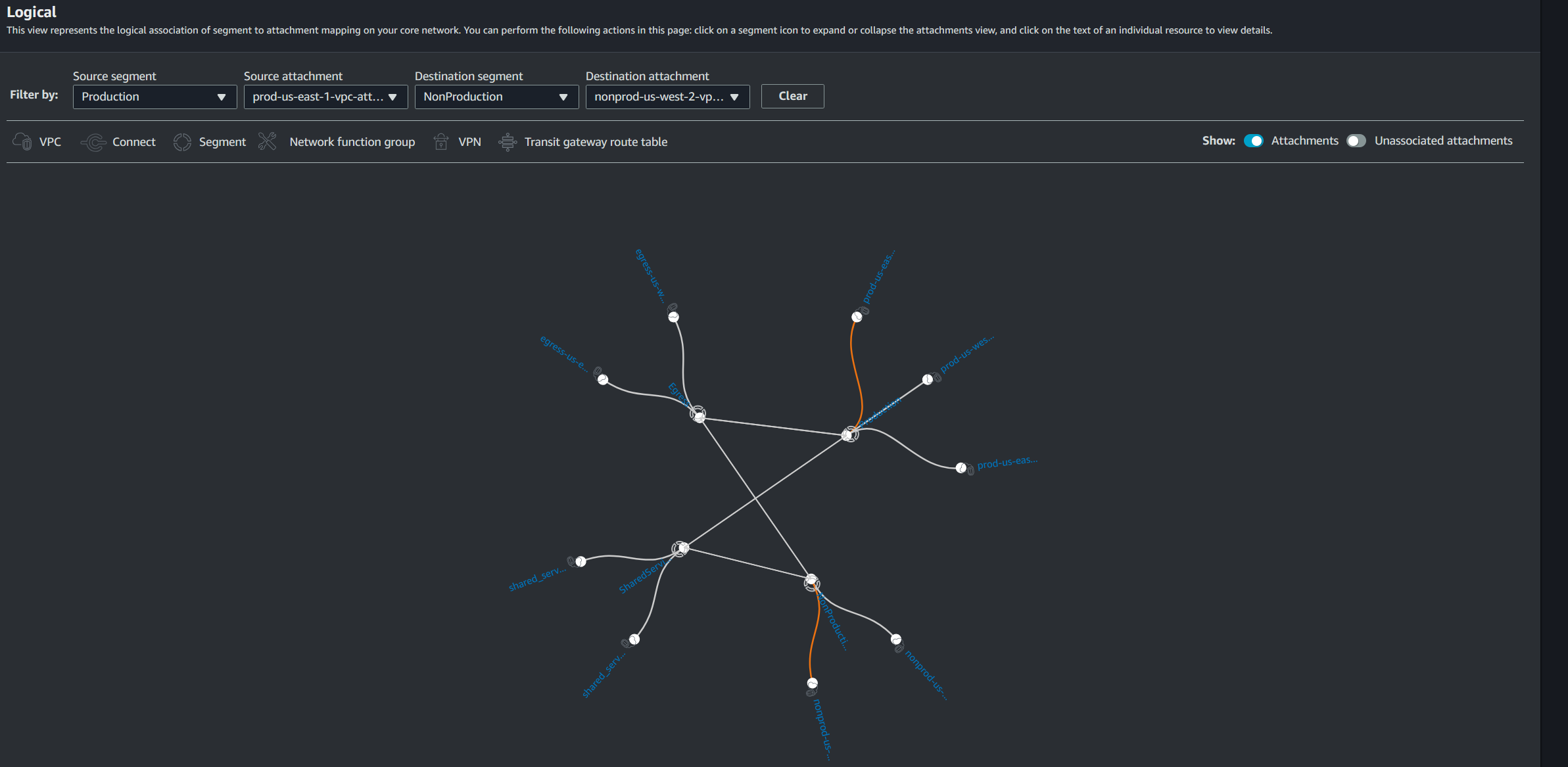

Conversely, here is an image showing an attempted connection between a Production VPC and a Non-Production VPC that is interrupted by the lack of a route between the segments. This highlights an important concept: Cloud WAN does not have an explicit DENY when defining the core network policy. Instead, all connections are implicitly denied unless explicitly allowed. When traffic attempts to reach a resource in a segment it is not allowed to reach, it is simply dropped because no route exists between those segments. In this case, the orange line shows that the VPC at each end of the connection can reach its own segment core, but the segments cannot reach each other. Shared segments such as Egress and Shared Services are also not transitive, so they cannot be used to create a path to a segment that is not directly attached. A blackhole route can also be used to explicitly deny specific IP addresses or IP ranges.
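The difference between an implicit deny (no route) and an explicit blackhole can be illustrated with a simplified longest-prefix-match lookup over a single segment route table. This is a toy model, not Cloud WAN's implementation: the CIDRs are invented, the egress attachment name is hypothetical, and the 10.0.0.0/8 blackhole is an assumption added for illustration. In the model, that blackhole shows how a more specific drop overrides the broader default route. Without it, a private destination with no specific route would match 0.0.0.0/0 and be handed to the egress attachment instead of being dropped.

```python
import ipaddress
from typing import Optional

# Hypothetical Production segment route table: (destination CIDR, target).
PRODUCTION_ROUTES = [
    ("10.1.0.0/16", "prod-us-east-1-vpc-attachment"),
    ("10.100.0.0/16", "shared_services-us-east-1-vpc-attachment"),
    ("0.0.0.0/0", "egress-us-east-1-vpc-attachment"),
    ("10.0.0.0/8", "blackhole"),  # explicit drop for private ranges with no more specific route
]
# No route exists for Non-Production CIDRs because the two segments are not shared.


def next_hop(routes, destination: str) -> Optional[str]:
    """Longest-prefix match over the route table; None means the packet is dropped."""
    address = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if address in ipaddress.ip_network(cidr)
    ]
    if not candidates:
        return None  # implicit deny: no matching route at all
    _, target = max(candidates, key=lambda c: c[0].prefixlen)
    return None if target == "blackhole" else target  # explicit drop


if __name__ == "__main__":
    for dest in ("10.1.2.3", "10.100.0.10", "10.20.0.5", "8.8.8.8"):
        print(dest, "->", next_hop(PRODUCTION_ROUTES, dest) or "dropped")
```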

This tool is useful not only for validating the intended connectivity and structure of the network, but also for troubleshooting when resources should have connectivity and don't. Note that VPC Reachability Analyzer unfortunately does not support Cloud WAN resources (as of this writing). If AWS eventually adds support for tracing traffic through a Cloud WAN network, it would complement this tool well for troubleshooting and validating configurations.

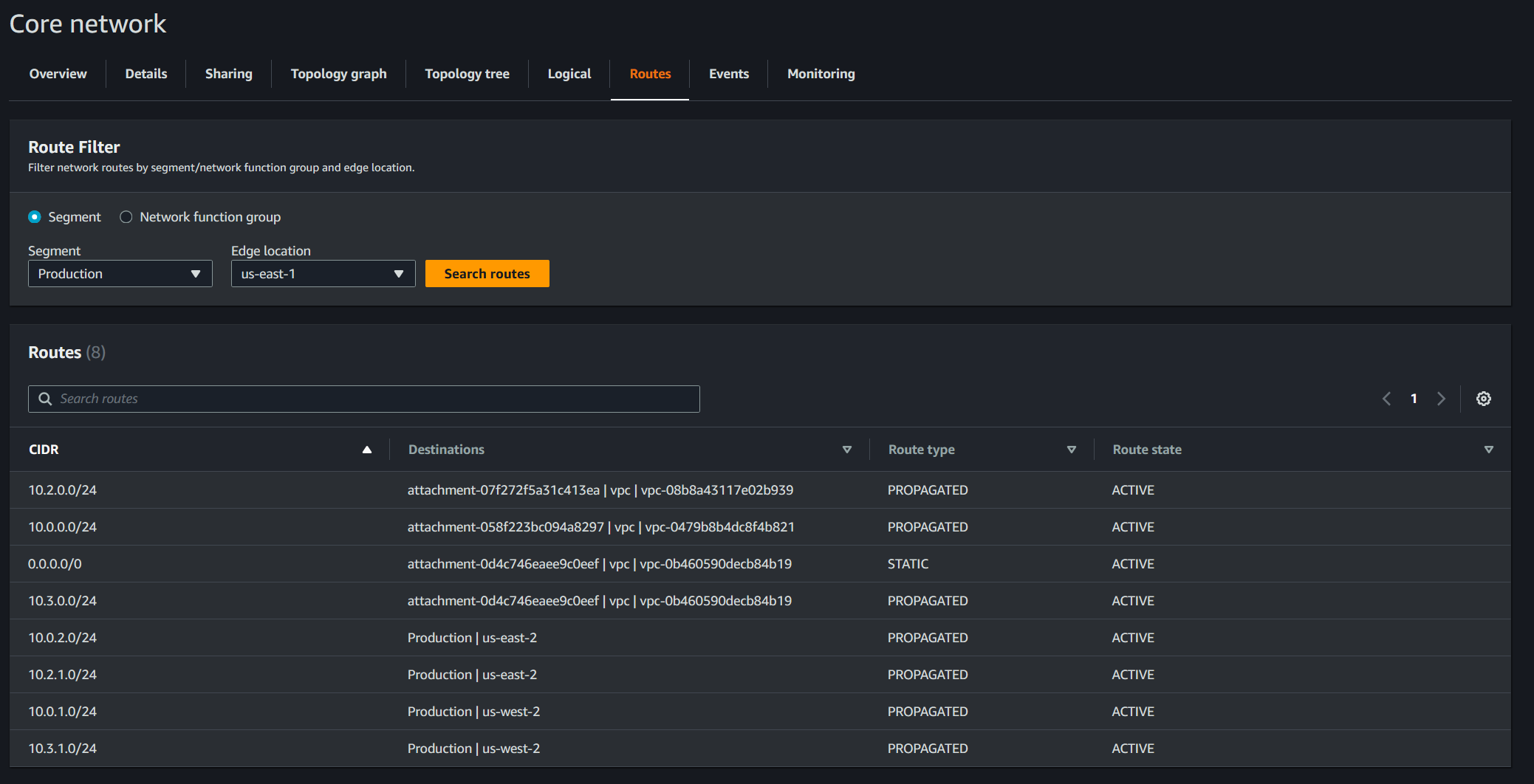

Among the other details visible in the console for Cloud WAN networks, a final one worth highlighting is the visibility of automatically created routes. This view really drives home the benefit of abstracting Transit Gateway management, as discussed earlier: despite the relative simplicity of this proof-of-concept design, a fairly large number of individual routes is still created for each region, each segment, and the system as a whole. The image below shows all of the routes created just for VPCs in us-east-1 attached to the Production segment. They include routes to other Production VPCs and to Shared Services, plus a default route pointing to the Egress attachment for internet connectivity through the centralized NAT Gateways. As you can imagine, the number of routes would grow dramatically with more VPCs and more segments, while the core network policy itself remains easy to manage. Each of the “PROPAGATED” routes is created based on which segments are allowed to talk to each other or within themselves, while the “STATIC” route here comes from the custom route defined in the Cloud WAN core network policy. Although a static route had to be created in this case to point the default route at the Egress segment, it only needs to be defined once and is automatically replicated to all VPCs and segments the route is configured to apply to.
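To see why a short policy expands into many routes, the sketch below derives per-segment route tables from a handful of attachments, the share relationships, and the static default routes, labeling each entry PROPAGATED or STATIC as the console does. It is a simplified model under stated assumptions, not how Cloud WAN computes routes: sharing is treated as bidirectional, isolated segments do not propagate their own attachments' routes internally, each segment is modeled as a single table (Cloud WAN keeps tables per region), and all names and CIDRs are hypothetical.

```python
from collections import defaultdict

# Hypothetical attachments: (attachment name, segment, VPC CIDR).
ATTACHMENTS = [
    ("prod-a", "production", "10.1.0.0/16"),
    ("prod-b", "production", "10.2.0.0/16"),
    ("nonprod-a", "nonproduction", "10.20.0.0/16"),
    ("shared-a", "sharedservices", "10.100.0.0/16"),
    ("egress-a", "egress", "10.200.0.0/16"),
]
# Share relationships from the policy.
SHARES = {"sharedservices": ["production", "nonproduction"], "egress": ["production", "nonproduction"]}
ISOLATED = {"sharedservices", "egress"}
# Static routes from the policy: (segment, destination CIDR, target attachment).
STATIC_ROUTES = [
    ("production", "0.0.0.0/0", "egress-a"),
    ("nonproduction", "0.0.0.0/0", "egress-a"),
]


def build_route_tables():
    """Return {segment: [(cidr, target, route_type), ...]} for this simplified model."""
    # Model sharing as bidirectional: each side learns the other's routes.
    peers = defaultdict(set)
    for segment, others in SHARES.items():
        for other in others:
            peers[segment].add(other)
            peers[other].add(segment)

    tables = defaultdict(list)
    segments = sorted({segment for _, segment, _ in ATTACHMENTS})
    for table_segment in segments:
        for name, segment, cidr in ATTACHMENTS:
            # Isolated segments do not propagate their own attachments' routes internally.
            same_segment = segment == table_segment and table_segment not in ISOLATED
            if same_segment or segment in peers[table_segment]:
                tables[table_segment].append((cidr, name, "PROPAGATED"))
    for segment, cidr, target in STATIC_ROUTES:
        tables[segment].append((cidr, target, "STATIC"))
    return dict(tables)


if __name__ == "__main__":
    for segment, routes in sorted(build_route_tables().items()):
        print(f"{segment}: {len(routes)} routes")
        for cidr, target, route_type in routes:
            print(f"  {cidr:<15} {target:<10} {route_type}")
```

Even with five attachments and two static routes, the model produces 15 route entries; each new VPC adds an entry to every table that can see it, while the policy inputs grow by only one line.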

Challenges and Lessons Learned

- AZ names (e.g., us-east-1a) are mapped randomly to physical zones for each account, so you must use the AZ ID (e.g., use1-az1) to ensure that AZ coverage maps correctly between VPCs, especially in segments such as Shared Services.

- If you deploy VPC attachments via IaC in the same Terraform workspace as the Cloud WAN Core Network, you can hit a race condition that is difficult to work around. We therefore recommend a dedicated deployment for the Cloud WAN resources, followed by a separate deployment for the VPC resources.

- The Cloud WAN Core Network Policy takes a while to finish deploying (~15-30+ minutes) and must be fully deployed before any VPC attachments can be created.

- Centralizing VPC endpoints in Shared Services requires DNS support and DNS hostnames to be enabled in the consuming VPCs (e.g., Production and Non-Production VPCs), along with a dedicated Route 53 Private Hosted Zone for each endpoint, shared with every VPC in those segments that needs to use it.

- Many, if not all, cross-region endpoint requests will fail with a certificate error because endpoints are region-specific.

- Cross-region egress through shared NAT Gateways is possible but adds measurable (though not necessarily intolerable) latency.

- Centralized egress is much easier to configure than centralized ingress, which requires much more substantial appliances to properly route inbound traffic from the internet and also negates some of the benefit of services like CloudFront by forcing a decentralized service to be centralized. We generally recommend keeping ingress web traffic decentralized and leveraging AWS-native or third-party WAFs for additional protection.

- When migrating existing VPCs into a Cloud WAN network, the final step of the migration should be altering the VPC route tables to start sending traffic into the Cloud WAN network.

- Protecting permissions to the centralized networking infrastructure is critically important: GuidePoint can help define appropriate guardrails to ensure that only authorized individuals can modify these network security configurations.
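Because the policy must finish deploying before attachments are created, a second-stage deployment may need to wait for the core network to settle first. The sketch below is a generic polling helper that takes a caller-supplied `get_state` function; using `AVAILABLE` as the target, and polling the core network's state at all, are our assumptions rather than a documented AWS or Terraform procedure. With boto3, `get_state` could plausibly wrap `boto3.client("networkmanager").get_core_network(CoreNetworkId=core_network_id)["CoreNetwork"]["State"]` (where `core_network_id` is a hypothetical variable), but confirm the field names, and whether the policy's change-set state is a better signal, against the boto3 documentation.

```python
import time
from typing import Callable, Tuple


def wait_for_state(
    get_state: Callable[[], str],
    target: str = "AVAILABLE",
    failure_states: Tuple[str, ...] = (),
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll get_state() until it returns target, a failure state, or the timeout expires."""
    deadline = clock() + timeout_seconds
    while True:
        state = get_state()
        if state == target:
            return state
        if state in failure_states:
            raise RuntimeError(f"entered failure state {state!r}")
        if clock() >= deadline:
            raise TimeoutError(f"still {state!r} after {timeout_seconds} seconds")
        sleep(poll_seconds)


if __name__ == "__main__":
    # Simulated states standing in for a real API call.
    simulated = iter(["UPDATING", "UPDATING", "AVAILABLE"])
    print(wait_for_state(lambda: next(simulated), poll_seconds=0))
```

Injecting `sleep` and `clock` keeps the helper testable without real delays, and the default one-hour timeout leaves headroom above the 15-30+ minute deployment times observed in the proof of concept.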

Key Takeaways

As organizations scale, their networks often become a tangled mess of VPC peering connections that don't scale well, leading to operational challenges. Transit Gateway (TGW) helps by centralizing connections, but as environments expand across regions with increasing demands for centralized ingress/egress and inspection, even TGWs grow complex and hard to manage. This lack of structure can create silos, increase costs, and make enforcing consistent policies across accounts difficult.

AWS Cloud WAN simplifies this by automating the deployment of managed TGWs and providing a unified global network layer. It connects VPCs, on-prem data centers, and remote sites while allowing centralized policy enforcement and traffic inspection. By consolidating NAT gateways, VPC endpoints, and other shared services, Cloud WAN reduces duplication and improves visibility. The result is a scalable, secure, and cost-effective way to manage growing, multi-account AWS environments, cutting through complexity with centralized control and automated connectivity. Stay tuned for a future blog post on latency testing within an AWS Cloud WAN network!